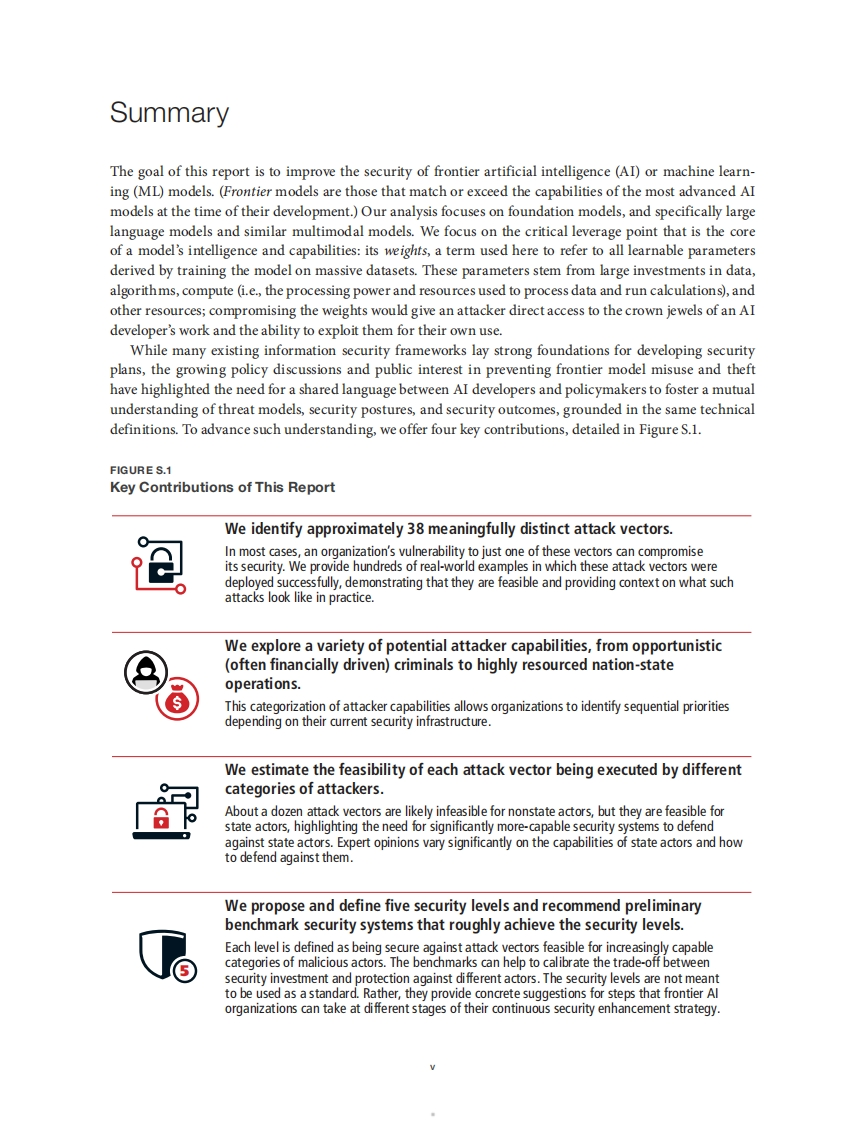

About This Report

As frontier artificial intelligence (AI) models (that is, models that match or exceed the capabilities of the most advanced AI models at the time of their development) become more capable, protecting them from malicious actors will become more important. In this report, we explore what it would take to protect the learnable parameters that encode the core capabilities of an AI model (also known as its weights) from a range of potential malicious actors. If AI systems rapidly become more capable over the next few years, achieving sufficient security will require investments, starting today, well beyond what the default trajectory appears to be.

We focus on the critical leverage point of a model's weights, which are derived by training the model on massive datasets. These parameters stem from large investments in data, algorithms, compute (i.e., the processing power and resources used to process data and run calculations), and other resources; compromising the weights would give an attacker direct access to the crown jewels of an AI organization's work and the nearly unrestrained ability to abuse them.

This report can help information security teams in frontier AI organizations update their threat models and inform their security plans, and it can help policymakers who engage with AI organizations better understand how to approach security-related topics.

Meselson Center

RAND Global and Emerging Risks is a division of RAND that delivers rigorous and objective public policy research on the most consequential challenges to civilization and global security. This work was undertaken by the division's Meselson Center, which is dedicated to reducing risks from biological threats and emerging technologies. The center combines policy research with technical research to provide policymakers with the information needed to prevent, prepare for, and mitigate large-scale catastrophes. For more information, contact meselson@rand.org.

Funding

Funding for this work was provided by gifts from RAND supporters.

Acknowledgments

We thank the following experts, as well as those who did not opt to be named, for their insights and contributions to the ideas in this report: Vijay Bolina, Paul Christiano, Jason Clinton, Lisa Einstein, Mark Greaves, Chris Inglis, Geoffrey Irving, Eric Lang, Jade Leung, Tara Michels-Clark, Nikhil Mulani, Anne Neuberger, Ned Nguyen, Nicole Nichols, Chris Rohlf, Wim van der Schoot, Phil Venables, and Heather Williams. Not all consulted experts support all recommendations and conclusions in the report, and the views expressed in

1 Author affiliations are as follows: Sella Nevo, Ajay Karpur, Jeff Alstott, Yogev Bar-On, and Henry Bradley (RAND), and Dan Lahav (Pattern Labs). This is the final research report following an interim report that was published in 2023: Sella Nevo, Dan Lahav, Ajay Karpur, Jeff Alstott, and Jason Matheny, "Securing Artificial Intelligence Model Weights: Interim Report," RAND Corporation, WR-A2849-1, 2023.
